AI for climate modelling

Machine learning for better and faster climate models

Global climate models aim to represent the key physical, chemical, and biological processes of Earth's climate. As such, they are essential tools for running policy-informing climate change simulations and for understanding paleoclimates. A caveat is that climate models are extremely computationally expensive and therefore require the fastest available supercomputers. For many types of simulations, however, even those supercomputers are not powerful enough to resolve several important climate processes globally (e.g. convection, clouds, atmospheric chemistry). The relatively coarse spatial resolution of climate models (typically between 0.5° and 2.5° in latitude and longitude) also limits their usefulness in informing societies about truly regional to local changes in climate.

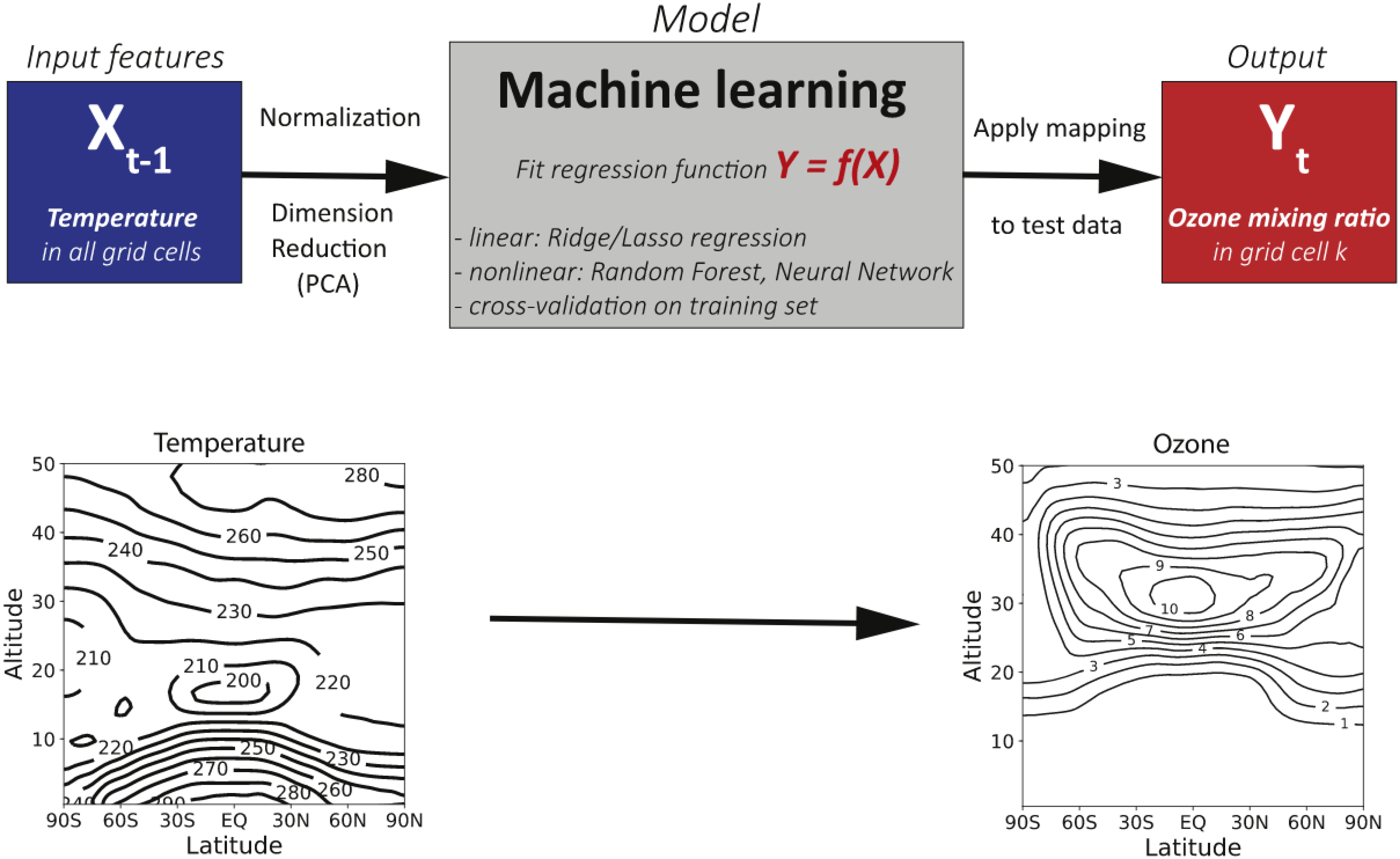

Machine learning techniques have the potential to make climate models better and faster, and to reduce their high energy consumption. For example, our group works on new machine learning parameterizations to replace computationally expensive but important climate model components. The principal idea is to formulate the underlying process dependencies as a regression problem. The figure on the right (from Nowack et al. ERL 2018) sketches such a regression formulation, in which we suggested a parameterization to emulate variability and trends in Earth's ozone layer based on atmospheric temperatures. This is important because changes in the ozone layer can, in turn, feed back onto Earth's climate through radiative interactions. Machine learning allows us to learn these often complex and high-dimensional dependencies so as to ultimately replace the underlying equation systems, which could otherwise only be solved step-wise with expensive numerical methods.
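
To make the regression idea concrete, here is a minimal sketch that maps a vector of temperatures to a vector of ozone values. It is an illustration under stated assumptions, not the method of the cited paper: the data are synthetic, the array sizes are arbitrary, and ridge regression (solved in closed form with NumPy) is just one simple choice of regression model. The names `fit_ridge`, `predict`, `temperature`, and `ozone` are hypothetical.

```python
import numpy as np


def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression with an unpenalised intercept.

    Solves w = (Xc^T Xc + alpha * I)^-1 Xc^T yc on centred data.
    """
    x_mean = X.mean(axis=0)
    y_mean = y.mean(axis=0)
    Xc = X - x_mean
    yc = y - y_mean
    n_features = X.shape[1]
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(n_features), Xc.T @ yc)
    b = y_mean - x_mean @ w
    return w, b


def predict(X, w, b):
    """Apply the fitted linear map to new inputs."""
    return X @ w + b


# Synthetic stand-in data (assumption): 500 time steps, temperatures at
# 20 locations as inputs, ozone at 5 locations as regression targets.
rng = np.random.default_rng(0)
n_samples, n_temp_points, n_ozone_points = 500, 20, 5
true_w = rng.normal(size=(n_temp_points, n_ozone_points))
temperature = rng.normal(size=(n_samples, n_temp_points))
ozone = temperature @ true_w + 0.1 * rng.normal(size=(n_samples, n_ozone_points))

# Fit on the first 400 samples and evaluate on the remaining 100.
train, test = slice(0, 400), slice(400, None)
w, b = fit_ridge(temperature[train], ozone[train], alpha=1.0)
pred = predict(temperature[test], w, b)

# Coefficient of determination on the held-out samples.
residual = ((ozone[test] - pred) ** 2).sum()
total = ((ozone[test] - ozone[test].mean(axis=0)) ** 2).sum()
r2 = 1.0 - residual / total
print(f"held-out R^2: {r2:.3f}")
```

Once fitted, evaluating `predict` is a single matrix product, which is the sense in which a learned parameterization can be much cheaper than solving the underlying equations step by step. In a real application the regularisation strength `alpha` would be chosen by cross-validation rather than fixed.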

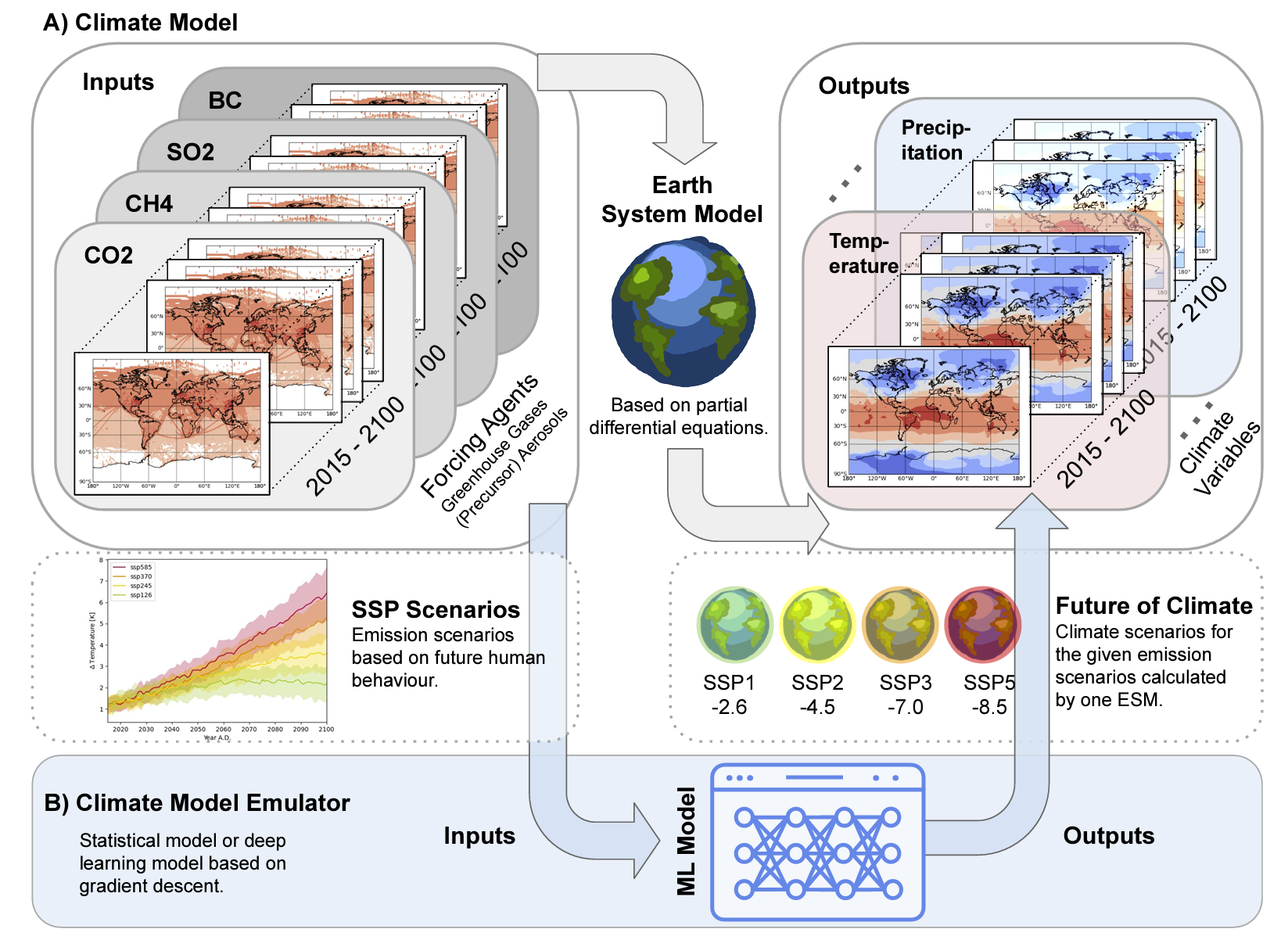

In addition, we work on machine learning-based climate emulators.

This is illustrated in the figure on the right (from Kaltenborn et al. NeurIPS 2023). In part A, an Earth system model, or climate model, simulates several climate change scenarios under scenario-dependent changes in greenhouse gas and aerosol emissions, and estimates the corresponding impacts on key variables such as regional surface temperature and precipitation. In part B, we then aim to avoid further expensive supercomputer simulations by learning the relationships between inputs and outputs from the existing simulations stored in large climate modelling archives. The resulting emulators allow us to calculate many climate policy scenarios and their implications, far more than is possible with state-of-the-art climate models.
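
The two-step workflow can be sketched as follows. This is a toy illustration under strong assumptions and not the approach of any cited paper: `toy_climate_model` is a hypothetical stand-in for an expensive simulation, the scenarios are simple linear ramps, the grid size is arbitrary, and the emulator is an ordinary least-squares fit from two scalar forcings to a flattened temperature map.

```python
import numpy as np

rng = np.random.default_rng(42)
N_YEARS, N_GRID = 80, 12 * 24  # assumption: 80 years on a flattened 12x24 grid

# Hidden spatial response patterns of the toy "climate model" (assumption).
_CO2_PATTERN = rng.uniform(0.5, 2.0, size=N_GRID)
_AEROSOL_PATTERN = rng.uniform(-1.0, 0.0, size=N_GRID)


def toy_climate_model(co2, aerosol):
    """Stand-in for part A: map forcing time series to yearly temperature maps."""
    signal = np.outer(co2, _CO2_PATTERN) + np.outer(aerosol, _AEROSOL_PATTERN)
    noise = 0.05 * rng.normal(size=signal.shape)  # crude internal variability
    return signal + noise


def make_scenario(co2_end, aerosol_end):
    """Linear ramps from zero to the given end-of-period forcing values."""
    t = np.linspace(0.0, 1.0, N_YEARS)
    return co2_end * t, aerosol_end * t


def design_matrix(co2, aerosol):
    """Stack the two forcings and a constant column as regression inputs."""
    return np.column_stack([co2, aerosol, np.ones_like(co2)])


# Part A: run the (here: toy) model for a few training scenarios.
training_scenarios = [(1.0, 1.0), (2.0, 0.5), (3.0, 0.2)]
X_parts, Y_parts = [], []
for co2_end, aerosol_end in training_scenarios:
    co2, aerosol = make_scenario(co2_end, aerosol_end)
    X_parts.append(design_matrix(co2, aerosol))
    Y_parts.append(toy_climate_model(co2, aerosol))
X_train, Y_train = np.vstack(X_parts), np.vstack(Y_parts)

# Part B: learn the input-output relationship from the stored simulations.
coef, *_ = np.linalg.lstsq(X_train, Y_train, rcond=None)

# Emulate a scenario the toy model was never trained on, then compare.
co2_new, aerosol_new = make_scenario(2.5, 0.8)
predicted = design_matrix(co2_new, aerosol_new) @ coef
reference = toy_climate_model(co2_new, aerosol_new)
rmse = np.sqrt(np.mean((predicted - reference) ** 2))
print(f"emulator RMSE on held-out scenario: {rmse:.3f}")
```

Because the toy model responds linearly to its inputs, a linear fit recovers it almost perfectly; real climate model output is nonlinear and noisy, so emulators in practice typically need more expressive models and careful evaluation on held-out scenarios.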

Selected publications:

(1) Nowack et al. Using machine learning to build temperature-based ozone parameterizations for climate sensitivity simulations. Environmental Research Letters (2018).

(2) Nowack et al. Machine learning parameterizations for ozone: climate model transferability. Conference Proceedings of the 9th International Workshop on Climate Informatics (2019).

(3) Kaltenborn et al. ClimateSet: A large-scale climate model dataset for machine learning. Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS), Datasets and Benchmarks Track (2023).

(4) Mansfield et al. Predicting global patterns of long-term climate change from short-term simulations using machine learning. npj Climate and Atmospheric Science (2020).

(5) Watson-Parris et al. ClimateBench v1.0: A Benchmark for Data-Driven Climate Projections. Journal of Advances in Modeling Earth Systems (2022).

(6) Nowack et al. A large ozone-circulation feedback and its implications for global warming assessments. Nature Climate Change (2015).